In today’s rapidly evolving world, where artificial intelligence is transforming how we live, work, and play, the term “neural network” has become increasingly common in discussions of cutting-edge technology. From powering autonomous vehicles to improving medical diagnoses, neural networks have proven to be a driving force behind some of the most remarkable advancements of our time.

Have you ever wondered how neural networks work and how they perform their tasks? If so, you’ve landed in the right spot. In this blog post, we’ll delve into the world of neural networks and explore the capabilities that let them mimic the human brain. It’s going to be quite a journey! We’ll demystify their complexities and look at the ways in which they shape our digital landscape.

Whether you’re a data scientist, an AI enthusiast, or simply someone intrigued by the potential of artificial intelligence, join us as we uncover the layers of neural networks and unveil their immense possibilities for our future. Get ready to dive into the realm of artificial intelligence and discover how neural networks are driving innovation by enabling machines to perform tasks that were once done exclusively by humans.

Overview of Neural Networks

Definition of Neural Networks

Artificial neural networks, often simply called neural networks, are computer models inspired by the structure and functioning of the brain. Their purpose is to replicate the brain’s ability to process information and learn.

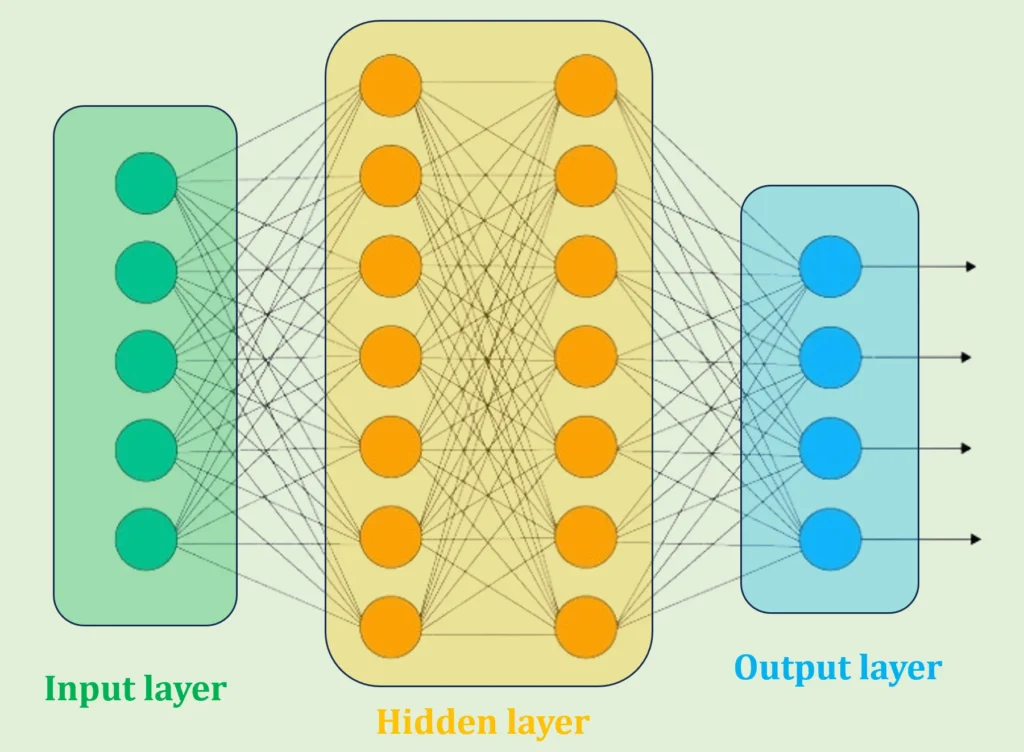

Neural networks consist of interconnected units called neurons that are organized into layers. These layers work together to perform calculations and make predictions based on input data. The output from one layer serves as the input for the next layer, allowing for progressive extraction of features and patterns.

The origins of neural networks can be traced back to the 1940s and 1950s, when early pioneers like Warren McCulloch and Walter Pitts introduced the concept of an artificial neuron. However, due to limitations in computing power and data availability, progress in neural networks was slow until recent advancements in technology.

Biological Inspiration Behind Neural Networks

The human brain functions as a powerful computing system, with the ability to handle large volumes of data and make intricate choices. Neurons, the basic components of the brain, transmit signals and form elaborate networks that underpin cognitive abilities.

Neural networks draw inspiration from the brain’s structure and functioning. Like neurons in the brain, artificial neurons in neural networks receive inputs, perform computations using activation functions, and produce outputs. The ability of neural networks to mimic the brain’s computational capabilities allows them to solve complex tasks that traditional algorithms struggle with.

Advantages of Neural Networks

Neural networks offer several advantages that contribute to their growing popularity in various fields:

- Parallel processing for efficient computation: Neural networks can process multiple inputs simultaneously, enabling highly efficient computations. This parallel processing capability significantly speeds up tasks such as image recognition or natural language processing.

- Self-learning and adaptability: Neural networks have the ability to learn from data and adapt their parameters to optimize performance. During training, a network’s weights and biases are adjusted to reduce errors and improve accuracy.

- Wide range of applications and use cases: Neural networks have a wide range of applications in domains such as image classification, natural language processing, speech recognition, autonomous vehicles, healthcare diagnostics, and finance. Their adaptability and flexibility make them well suited for tackling problems across industries.

Fundamentals of Neural Networks

Neurons and Activation Functions

In neural networks, neurons act as basic computational units. These neurons receive inputs, weigh them based on associated weights, and pass them through an activation function to produce an output. The choice of activation function greatly impacts the network’s learning and decision-making abilities. Popular activation functions include:

- Sigmoid function: It maps the input to a range between 0 and 1. This activation function is commonly used in binary classification problems.

- ReLU (Rectified Linear Unit): It is a piecewise linear function that outputs the input directly if it is positive, and zero otherwise. ReLU is widely used in deep learning architectures due to its ability to mitigate the vanishing gradient problem.
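The two activation functions above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a library implementation; the function names `sigmoid` and `relu` are chosen here for readability, and the branch inside `sigmoid` is just one common way to avoid overflow for large negative inputs.

```python
import math

def sigmoid(x: float) -> float:
    """Squash any real number into the open interval (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use an equivalent form that never exponentiates a large positive number.
    z = math.exp(x)
    return z / (1.0 + z)

def relu(x: float) -> float:
    """Pass positive inputs through unchanged and clamp everything else to zero."""
    return x if x > 0 else 0.0

for value in (-2.0, 0.0, 3.0):
    print(f"x={value:+.1f}  sigmoid={sigmoid(value):.4f}  relu={relu(value):.1f}")
```

Note how the sigmoid output stays strictly between 0 and 1, which is why it is convenient for binary classification, while ReLU leaves positive values untouched.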

Learning in Neural Networks

Neural network learning may be divided into three primary categories:

- Supervised learning: Labeled datasets are used to train the neural network in this kind of learning. The network learns to map input features to the correct output based on the provided labels. Supervised learning is frequently applied to regression and classification applications.

- Unsupervised learning: In this method, neural networks are trained on unlabeled datasets and left to find significant patterns and relationships on their own, without human-provided labels. Unsupervised learning is frequently used for dimensionality reduction and clustering.

- Reinforcement learning: The neural network learns, through interactions with its environment, to act in a way that maximizes rewards. This kind of learning is frequently employed in video game AI and robotics.

Layers and Architectures

Neural networks are organized into layers, each serving a specific purpose in information processing. The three main types of layers are:

- Input layer: This layer receives the initial input data and passes it to the subsequent layers for processing.

- Hidden layer(s): These layers are positioned in between the output and input layers. They perform computations and feature extraction, helping the network learn and make predictions.

- Output layer: The network’s last layer uses the data processed by the preceding layers to generate the final output.

Neural networks with several hidden layers are called deep neural networks (DNNs). This design allows complex features to be represented hierarchically, so the network can pick up high-level abstractions. In domains such as computer vision and natural language processing, DNNs have achieved remarkable results.

Convolutional Neural Networks (CNNs) are a kind of neural network commonly applied to image recognition tasks. They employ convolutional layers to perform localized feature extraction, enabling effective analysis of visual data.
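To make "localized feature extraction" concrete, here is a rough sketch of what a single convolutional filter computes. It assumes no padding and a stride of 1, and it uses the sliding dot product (cross-correlation) that deep learning libraries conventionally call convolution. The function name `conv2d_valid`, the toy image, and the kernel values are all made up for illustration.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and return the feature map."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            # Each output value depends only on a small local patch of the image.
            for di in range(kernel_h):
                for dj in range(kernel_w):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map

# Toy 3x4 "image": dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-written kernel that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d_valid(image, vertical_edge)
print(feature_map)  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The filter responds only in the column where the brightness changes. In a real CNN the kernel values are learned during training rather than written by hand.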

Recurrent Neural Networks (RNNs) are another type of neural network, designed to capture temporal behavior. They are suitable for tasks involving sequential data, such as speech recognition and natural language processing. Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) are popular RNN architectures that model long-term dependencies and mitigate the vanishing gradient problem.
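The recurrence itself can be sketched as follows. This is a plain (Elman-style) recurrent cell, not an LSTM or GRU, which add gating on top of the same idea. The weight names `W_XH`, `W_HH`, and `B_H` and their values are arbitrary assumptions chosen for illustration.

```python
import math

def rnn_step(x, h_prev, w_xh, w_hh, b_h):
    """One time step: h_t = tanh(W_xh @ x + W_hh @ h_prev + b_h)."""
    h_new = []
    for i in range(len(b_h)):
        total = b_h[i]
        total += sum(w * xi for w, xi in zip(w_xh[i], x))
        total += sum(w * hi for w, hi in zip(w_hh[i], h_prev))
        h_new.append(math.tanh(total))
    return h_new

def run_rnn(sequence, w_xh, w_hh, b_h):
    """Feed a sequence of any length through the cell, carrying the hidden state forward."""
    h = [0.0] * len(b_h)
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b_h)
    return h

# Illustrative weights: 1 input feature, 2 hidden units.
W_XH = [[0.5], [-0.3]]
W_HH = [[0.1, 0.2], [0.0, 0.4]]
B_H = [0.0, 0.1]

forward_order = run_rnn([[1.0], [0.0]], W_XH, W_HH, B_H)
reverse_order = run_rnn([[0.0], [1.0]], W_XH, W_HH, B_H)
print(forward_order, reverse_order)
```

Because the hidden state is fed back in at every step, the same inputs in a different order produce a different final state, which is what makes this kind of network sensitive to sequence order.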

Weights and Biases

The weights and biases in a neural network determine the strength of connections between neurons and influence the network’s decision-making process. During training, these parameters are adjusted iteratively using optimization algorithms, such as gradient descent, to minimize the error between predicted and actual outputs.

The weights control the impact of each input on the output of a neuron, while biases provide an additional value that influences the activation function. Proper initialization and fine-tuning of weights and biases are essential for achieving optimal performance in neural networks.
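As a small illustration of the difference between the two kinds of parameters, the sketch below computes a neuron’s pre-activation value, that is, the number handed to the activation function. The helper name `weighted_sum` and the numeric values are hypothetical.

```python
def weighted_sum(inputs, weights, bias):
    """Return the neuron's pre-activation value: sum(w_i * x_i) + bias."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias

inputs = [1.0, 2.0]
weights = [0.5, -0.25]  # illustrative values, not learned from data

without_bias = weighted_sum(inputs, weights, bias=0.0)
with_bias = weighted_sum(inputs, weights, bias=0.1)
doubled_first_weight = weighted_sum(inputs, [1.0, -0.25], bias=0.0)

print(without_bias, with_bias, doubled_first_weight)
```

Changing a weight rescales the contribution of one particular input, while changing the bias shifts the result by the same amount regardless of the inputs.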

Different types of neural networks exist, each with its own purposes and applications. Some commonly used neural network architectures include:

- Feedforward Neural Networks (FNN): FNNs are the simplest type of neural network, with interconnected layers that process information in one direction, from the input layer to the output layer. They are often used in tasks like classification and regression.

- Convolutional Neural Networks (CNN): CNNs are specialized in image recognition tasks. These networks utilize convolution to extract features from image inputs making them highly effective for tasks such as object detection and image classification.

- Recurrent Neural Networks (RNN): RNNs are designed to handle sequential data, such as text or time series, where the order of input elements is significant. They employ feedback connections that enable them to work with variable-length input sequences.

- Long Short-Term Memory (LSTM): LSTM is a variation of RNN that addresses the vanishing gradient problem. It introduces gated cells that can retain information over more extended periods, making it ideal for applications involving long-term dependencies or time-series analysis.

- Gated Recurrent Unit (GRU): Similar to LSTM, GRU is another variation of RNN that addresses the vanishing gradient problem. It combines the idea of memory cells and gating mechanisms to capture long-term dependencies.

- Autoencoders: Autoencoders are a type of neural network designed to learn a compact encoding of the input data. They consist of an encoder, which learns to create a compressed representation, and a decoder, which reconstructs the input from that representation.

- Generative Adversarial Networks (GAN): A GAN consists of two networks, a generator and a discriminator, which are trained against each other in an adversarial setting. The generator creates synthetic data, whereas the discriminator assesses whether that data looks real. GANs are commonly employed for tasks like data augmentation and image generation.

- Self-Organizing Maps (SOM): SOMs are neural networks that create low-dimensional representations of high-dimensional input data through unsupervised learning. They come in handy for tasks like outlier detection, visualization, and clustering.

- Radial Basis Function Networks (RBFN): RBFNs are feedforward neural networks that use radial basis functions as activation functions. They have applications in function approximation and pattern recognition.

Training and Optimization of Neural Networks

Backpropagation Algorithm

The backpropagation algorithm is a crucial component of training neural networks. It enables the network to adjust its weights and biases by propagating error information backward from the output layer toward the input layer. Through this approach, the network can improve its predictions and learn from its mistakes. The main aspects of the algorithm are:

- Error estimation and gradient descent: The algorithm computes the difference between predicted outputs and actual outputs and calculates the gradients of this error with respect to the network’s weights and biases. The gradients indicate the direction and magnitude of adjustments needed to minimize the error.

- Update rules for network parameters: Using the calculated gradients, the algorithm updates each weight and bias by subtracting its gradient multiplied by a learning rate. The learning rate determines how large a step the network takes in response to the gradients.

- Role of backpropagation in training neural networks: As the backpropagation algorithm iteratively updates the network’s parameters, the network gradually learns to minimize the error between its predicted outputs and the actual outputs. This process is known as training and, when it goes well, results in a network capable of making accurate predictions on unseen data.
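The sketch below walks through these ideas on the smallest network that still needs a backward pass: one input, one tanh hidden neuron, and one linear output neuron, trained on a single example with a squared-error loss. Everything here (the function names, the starting parameters, the learning rate of 0.1, the target of 0.8) is an assumption made for illustration, not a recipe for real training.

```python
import math

def forward(params, x):
    """Forward pass: input -> tanh hidden neuron -> linear output neuron."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)
    y = w2 * h + b2
    return h, y

def loss(params, x, target):
    """Squared error between the prediction and the target."""
    _, y = forward(params, x)
    return (y - target) ** 2

def backward(params, x, target):
    """Backward pass: apply the chain rule from the output back toward the input."""
    w1, b1, w2, b2 = params
    h, y = forward(params, x)
    d_y = 2.0 * (y - target)     # dL/dy
    grad_w2 = d_y * h            # output-layer gradients
    grad_b2 = d_y
    d_h = d_y * w2               # error propagated back to the hidden neuron
    d_z = d_h * (1.0 - h * h)    # through tanh, whose derivative is 1 - tanh^2
    grad_w1 = d_z * x            # hidden-layer gradients
    grad_b1 = d_z
    return [grad_w1, grad_b1, grad_w2, grad_b2]

def sgd_step(params, grads, learning_rate):
    """Update rule: subtract the gradient scaled by the learning rate."""
    return [p - learning_rate * g for p, g in zip(params, grads)]

params = [0.5, 0.0, -0.3, 0.1]  # arbitrary starting values for w1, b1, w2, b2
x, target = 1.0, 0.8
initial_loss = loss(params, x, target)
for _ in range(200):
    params = sgd_step(params, backward(params, x, target), learning_rate=0.1)
final_loss = loss(params, x, target)
print(f"loss before: {initial_loss:.4f}, loss after: {final_loss:.2e}")
```

Real networks repeat exactly this pattern, only with many neurons per layer and many training examples, which is why libraries compute the backward pass automatically.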

Regularization Techniques

Overfitting, a common problem in neural networks, occurs when the network becomes too specialized in the training data and performs poorly on new, unseen data. Regularization techniques help prevent overfitting and improve the network’s generalization abilities.

- Preventing overfitting with regularization: Regularization techniques add a regularization term to the network’s loss function, penalizing overly complex models. This encourages the network to prioritize simpler solutions that generalize well to unseen data.

- L1 and L2 regularization methods: L1 and L2 regularization are two popular regularization methods. L1 regularization adds the sum of the absolute values of the weights to the loss function, encouraging sparsity and reducing the impact of less important features. L2 regularization adds the sum of the squared weights to the loss function, encouraging smaller weights overall so that no single weight dominates.

- Dropout regularization for improved generalization capabilities: Dropout regularization randomly drops a certain proportion of neurons and their associated connections during training, forcing the network to learn redundant representations. This technique improves the network’s generalization abilities by reducing reliance on specific neurons and mitigating overfitting.
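A minimal sketch of the three techniques above, using only the standard library. The `strength` argument stands for the regularization coefficient, and the dropout function uses the "inverted dropout" variant, which rescales the surviving activations so their expected value is unchanged. Both choices, like the function names and sample numbers, are assumptions made for this example.

```python
import random

def l1_penalty(weights, strength):
    """L1 term added to the loss: strength * sum of |w|."""
    return strength * sum(abs(w) for w in weights)

def l2_penalty(weights, strength):
    """L2 term added to the loss: strength * sum of w^2."""
    return strength * sum(w * w for w in weights)

def dropout(activations, drop_probability, rng):
    """Inverted dropout: zero each activation with the given probability, rescale the rest."""
    keep_probability = 1.0 - drop_probability
    return [
        a / keep_probability if rng.random() < keep_probability else 0.0
        for a in activations
    ]

weights = [0.5, -1.5, 0.0]
data_loss = 0.40  # hypothetical loss measured on the training data
print("with L1:", data_loss + l1_penalty(weights, strength=0.01))
print("with L2:", data_loss + l2_penalty(weights, strength=0.01))

rng = random.Random(0)  # fixed seed so the example is repeatable
print(dropout([1.0, 1.0, 1.0, 1.0], drop_probability=0.5, rng=rng))
```

Dropout is applied only during training; at prediction time all neurons are kept.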

Hyperparameter Tuning

Hyperparameters play a crucial role in the performance and behavior of neural networks. Unlike learned parameters, these settings are not determined through training; they are chosen beforehand, and they greatly impact how the network operates.

Hyperparameters govern aspects such as the network architecture, learning rate, and dropout rate, among others. Tuning them is essential for optimizing neural networks to handle specific tasks, since the choice of hyperparameters significantly impacts the network’s learning capacity, convergence rate, and generalization abilities.

Several techniques exist for hyperparameter optimization, including grid search and random search. Grid search systematically evaluates different combinations of hyperparameter values within predetermined ranges. Random search, on the other hand, randomly samples different hyperparameter configurations. Both methods aim to find the best-performing set of hyperparameters based on predefined evaluation metrics.
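The contrast between the two strategies can be sketched like this. The `validation_score` function is a made-up stand-in for "train a network with these settings and measure it on validation data", and the candidate values and ranges are likewise invented for illustration.

```python
import itertools
import random

def validation_score(learning_rate, dropout_rate):
    """Hypothetical stand-in for training a model and returning its validation score."""
    return 1.0 - abs(learning_rate - 0.01) * 10 - abs(dropout_rate - 0.3)

def grid_search(grid):
    """Evaluate every combination of the listed values and keep the best one."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        score = validation_score(**config)
        if best is None or score > best[0]:
            best = (score, config)
    return best

def random_search(ranges, trials, rng):
    """Sample configurations uniformly from the given ranges and keep the best one."""
    best = None
    for _ in range(trials):
        config = {name: rng.uniform(low, high) for name, (low, high) in ranges.items()}
        score = validation_score(**config)
        if best is None or score > best[0]:
            best = (score, config)
    return best

grid = {"learning_rate": [0.001, 0.01, 0.1], "dropout_rate": [0.1, 0.3, 0.5]}
best_grid = grid_search(grid)

ranges = {"learning_rate": (0.001, 0.1), "dropout_rate": (0.0, 0.5)}
best_random = random_search(ranges, trials=20, rng=random.Random(0))

print("grid search:  ", best_grid)
print("random search:", best_random)
```

Grid search needs one evaluation per combination (nine here), so its cost grows quickly as hyperparameters are added, whereas random search lets you fix the number of trials up front.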

Feedforward Propagation

Feedforward propagation is the process by which input data, such as new, unseen data at prediction time, is passed through the network. It involves forwarding the input data through the network’s layers, applying the learned weights and biases, and producing the final output.

During feedforward propagation, each neuron receives input from the previous layer, performs the necessary computations using the activation function and its associated weights and biases, and passes the output to the next layer. This sequential passing of information through the network accounts for its ability to process complex data and make accurate predictions.
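Here is a rough sketch of that layer-by-layer flow for a tiny network with two inputs, a hidden layer of two ReLU neurons, and a single sigmoid output. The weights are arbitrary numbers picked so the arithmetic is easy to follow; in practice they would come from training. The function names are assumptions for this example.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def relu(x):
    return max(0.0, x)

def dense_layer(inputs, weights, biases, activation):
    """Each neuron: weighted sum of the previous layer's outputs, plus bias, then activation."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]

def feedforward(inputs, layers):
    """Pass the data through the layers in order; each output feeds the next layer."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense_layer(activations, weights, biases, activation)
    return activations

layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu),  # hidden layer: 2 inputs -> 2 neurons
    ([[1.0, -1.0]], [0.0], sigmoid),                # output layer: 2 inputs -> 1 neuron
]
prediction = feedforward([2.0, 1.0], layers)
print(prediction)  # roughly [0.378]
```

For the input `[2.0, 1.0]`, the hidden layer produces `[1.0, 1.5]`, and the output neuron applies the sigmoid to `1.0 - 1.5 = -0.5`.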

Applications of Neural Networks

Neural networks have a wide range of applications across various industries due to their ability to perform complex tasks that traditional algorithms struggle with. Some of the notable applications include:

Image Classification and Object Recognition

Neural networks, especially CNNs, have revolutionized image classification and object recognition. By leveraging the hierarchical feature extraction capabilities of CNNs, computers can accurately analyze and classify images, enabling applications such as face recognition, autonomous driving, and security systems.

Natural Language Processing

Natural language processing (NLP) is the field of teaching computers to comprehend and produce human language. Neural networks, especially RNNs and their variants such as LSTM, have greatly enhanced the effectiveness of NLP tasks like sentiment analysis, machine translation, and question answering.

Speech and Audio Processing

Neural networks play a crucial role in speech and audio processing, including speech recognition, speaker identification, and music generation. RNNs and CNNs are commonly employed for tasks like automatic speech recognition and music genre classification, bringing substantial improvements in accuracy and efficiency.

Autonomous Vehicles

Neural networks are at the core of autonomous vehicle technologies. They enable systems to recognize objects, interpret real-time sensor data, and make informed decisions to ensure safe and efficient navigation. Autonomous vehicles heavily rely on CNNs for tasks like object detection, lane detection, and traffic sign recognition.

Healthcare and Medical Diagnostics

Neural networks have demonstrated enormous promise for drug development, disease prediction, and medical diagnostics in the healthcare industry. By utilizing vast datasets and sophisticated architectures, they can help with early disease diagnosis, medical image classification, and treatment plan personalization.

Finance and Stock Market Prediction

Neural networks have made significant contributions to financial applications, including stock market prediction, fraud detection, and credit scoring. With the ability to process large amounts of financial data and identify intricate patterns, neural networks offer insights and predictions that aid in making informed financial decisions.

Game AI and Reinforcement Learning

Game AI heavily relies on neural networks and reinforcement learning techniques to create sophisticated gameplay experiences. Neural networks can learn optimal strategies through interaction with game environments, enabling game characters or opponents to behave intelligently and adapt to changing conditions.

Recommender Systems

Neural networks are integral to recommender systems, enhancing personalized content recommendations. These systems employ neural networks to analyze user behavior and preferences, enabling collaborative filtering by modeling user-item interactions. By leveraging deep learning techniques, such as CNNs and RNNs, they extract intricate patterns from user data and content characteristics, improving recommendation accuracy. Real-time personalization and hybrid approaches further refine suggestions, enhancing user experience in sectors like e-commerce and streaming services. Neural networks allow recommender systems to continually adapt to evolving user tastes and deliver more relevant, engaging content or product recommendations.

Challenges and Limitations of Neural Networks

Neural networks, while powerful and versatile, come with a set of challenges and limitations:

- Data Dependency: Neural networks require large amounts of labeled data for training, which can be costly and time-consuming to acquire. In domains with limited data, models may underperform.

- Overfitting: Neural networks are prone to overfitting, where they perform well on the training data but poorly on unseen data. Regularization techniques are necessary to mitigate this issue.

- Computational Demands: Training deep neural networks demands substantial computational resources, including high-performance GPUs or TPUs. This limits their accessibility for smaller organizations and resource-constrained environments.

- Interpretability: The “black box” problem arises from the difficulty of interpreting neural networks due to their complexity. It can be hard to understand how they make decisions, particularly in situations where transparency is essential.

- Ethical Concerns: Biases present in the training data can be learned and perpetuated by neural networks, leading to unfair or discriminatory outcomes. Ensuring ethical and unbiased AI is a significant challenge.

- Scalability: Scaling neural networks for large datasets can be complex, and issues like vanishing or exploding gradients can hinder training in deep architectures.

- Hyperparameter Tuning: Selecting the right hyperparameters for neural networks can be a time-consuming and iterative process, often requiring expertise or automated hyperparameter search techniques.

- Limited Understanding of Inner Workings: While neural networks can achieve impressive results, their inner workings are often not deeply understood. Researchers are still trying to figure out why they work and how to make them better.

- Hardware Restrictions: As deep learning requires ever-more-powerful computational resources, developing effective hardware for neural network training and deployment remains a challenge.

- Generalization Issues: Neural networks may not generalize well to different data distributions or unseen scenarios, making them sensitive to variations in the input data.

- Long Training Times: Training neural networks can be time-consuming, which becomes impractical when real-time or near-real-time decision making is needed.

Despite these challenges and limitations, neural networks continue to drive progress in many fields. Ongoing research aims to overcome these issues and make neural networks more accessible and reliable for a wide range of applications.

Conclusion

In conclusion, neural networks represent a groundbreaking advancement in the field of artificial intelligence and machine learning. Inspired by the structure of the brain, these networks have revolutionized how we process and comprehend data, leading to applications such as image and speech recognition, natural language understanding, recommendation systems, and much more.

While we’ve explored their incredible potential, it’s crucial to acknowledge the challenges and limitations, from overfitting and data quality to interpretability and ethical concerns. These challenges highlight the importance of continued development and ongoing research in the field of AI to overcome these barriers.

Looking to the future, neural networks will likely continue to push the boundaries of what can be achieved, fostering innovation and reshaping how we interact with technology. Embracing these advancements while considering their practical consequences will be crucial in harnessing the potential of neural networks within a rapidly evolving AI landscape. So, let’s remain curious, stay well informed, and actively participate in the journey toward a more interconnected future.

Frequently Asked Questions (FAQs)

What are some limitations associated with neural networks?

Neural networks come with several constraints, including:

1. Overfitting: They can become too specialized in the training data.

2. Data Dependency: They require large, high-quality datasets.

3. Lack of Interpretability: They can be challenging to understand and interpret.

4. Computational Demands: Training deep networks demands significant computing power.

5. Ethical Concerns: They can perpetuate biases present in training data.

How do neural networks differ from traditional algorithms?

Neural networks differ in that they can learn directly from data rather than relying on explicit, rule-based programming. They are highly proficient at modeling nonlinear relationships within data, which makes them well suited for tasks like recognizing images and processing natural language.

Are there any restrictions on the complexity of neural networks?

Neural networks can become incredibly intricate, and in principle there is no cap on their complexity. Nevertheless, practical limitations such as hardware constraints and training duration impose boundaries on how complex they can be.

How do neural networks impact job automation?

Neural networks, along with AI in general, have the potential to automate a wide range of jobs, particularly those involving repetitive, data-driven tasks. They can lead to increased efficiency but also raise concerns about job displacement, necessitating reskilling and adaptation in the workforce.

Can neural networks exhibit biased behavior?

Yes, neural networks can exhibit biased behavior if they are trained on biased data. Biases present in the training data can be learned and perpetuated by the model, leading to unfair or discriminatory outcomes. Addressing these biases is a critical concern in AI development to ensure ethical and unbiased behavior.